How to save 24kEUR in Azure Data Factory or Azure Synapse Analytics!

A few weeks ago, a client called me. They needed help with their on-premises data gateway to update Power BI on-premises data sources and required a new self-hosted integration runtime (SHIR) installation for their Azure Data Factory (ADF). While we prepared the machine and installed the SHIR, we talked about different topics like monitoring and cost optimization. Then, something happened I did not expect: their CIO informed me that the current cost was roughly 2,5kEUR per month! Further, I found out that the runtime of their pipelines to update their data warehouse (DWH) was increasing and sometimes failed or did not update in time to cover the analyst’s needs.

Why didn’t I expect this? Because the amount of data shifted through and transformations done in this ADF were never intended to be that high back when I drafted and set up the architecture.

Problem

- Very high ADF cost: per month (2,5kEUR +)

- Long runtime of pipelines to update the DWH

- Outdated data for analysts use cases

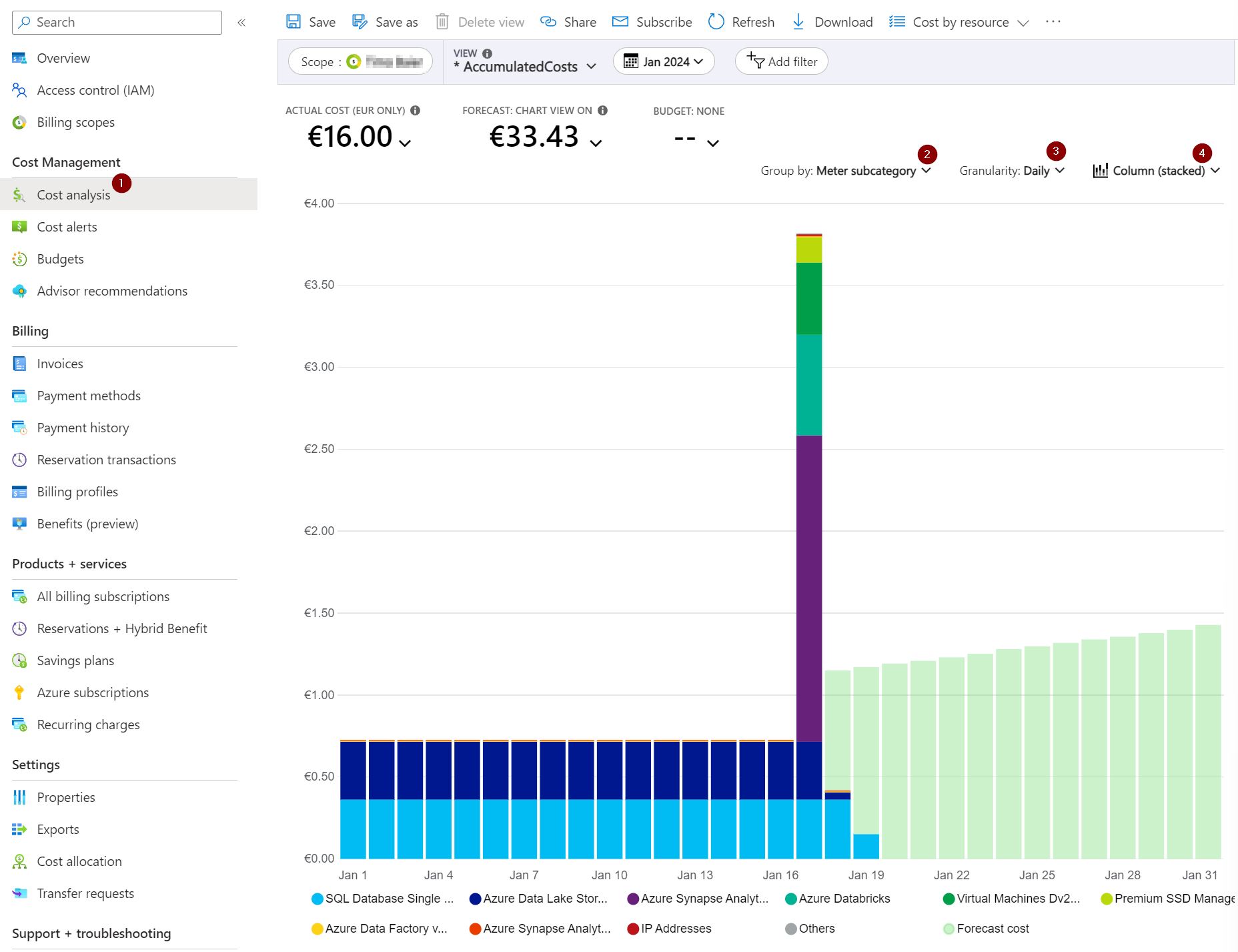

Tipp: aggregate your costs as column (stacked) by meter subcategory on a daily level to get a detailed view and feel for your costs!

Analysis

After the SHIR was updated and we ensured the pipelines could run with the new setup, I immediately looked at their cost management to identify where the rising cost came from.

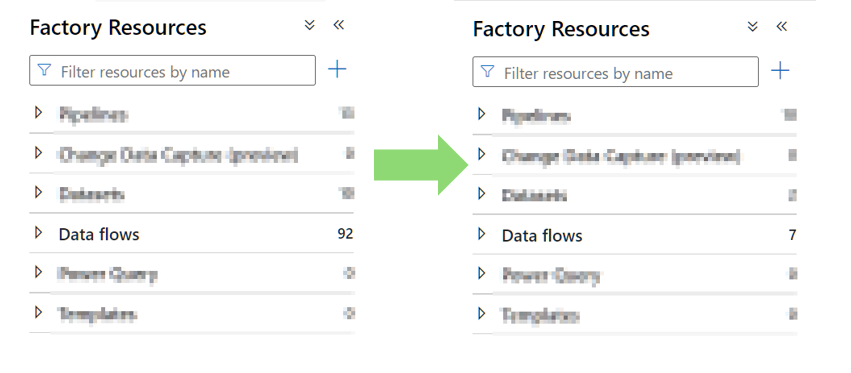

It didn‘t take me long to see that the high cost came from mapping dataflows, so I checked their ADF and saw that they were using the mapping dataflows to read CSV files, set the datatypes, and write them to an Azure SQL DB. Further, they used one dataflow per source- and sink dataset, resulting in 92 single mapping dataflows.

After I saw that, I explained the issue. I suggested a solution that stages the ingested data to parquet instead of CSV, keeping datatypes intact while iterating through a metadata-driven approach using lookups and copy data activities to avoid mapping dataflows (and the cost of cluster startup and execution time). The client was interested, and we started the week after with the implementation.

Solution

- Use Parquet instead of CSV for ingested data reduced not only the storage used on the data lake but also keeps datatypes from their extracted source tables

- Mapping Dataflows only for transforming data, not to copy data from source to sink!

- Copy Data activities embraced by a metadata-driven approach

- Rules to monitor Budgets in cost management and reduce monthly cost from 2,5 kEUR to ~0,5kEUR

Let’s do the math: 2.000 € * 12 = 24.000 € saved per year on this topic!

Learnings & Tips

- Monitor your costs actively; give them a budget!

- Don‘t hesitate to do a health check with an external partner occasionally!

- Especially cloud environments are inviting and easy to scale up and out – not always for the best

- Learning these topics is a process. It is not a shame to ask for help or a good sparrings partner from time to time

- Sometimes, it can be quite easy to reduce complexity and costs by investing a small amount of time